LibVF.IO: Commodity GPU Multiplexing Driven By VFIO & YAML.

Arthur Rasmusson (Co-Founder, Arc Compute)

1 Abstract

The following document describes the reasoning behind the creation of LibVF.IO, some basic definitions relating to GPU virtualization today, and a guide to using LibVF.IO to multiplex vendor-neutral commodity GPU devices via YAML.

2 Table of Contents

1 Abstract

2 Table of Contents

3 Introduction

4 What can I do with LibVF.IO?

5 Quick Start

5.1 What you'll need

5.1.1 Host OS ISO (Ubuntu)

5.1.2 LibVF.IO

5.1.3 Windows 10 ISO

5.1.4 Host Mdev GPU Driver

5.2 Run the install script & reboot

5.3 Setting up your first VM

5.3.1 Install your Windows guest

5.3.2 Install the guest utils

5.3.3 Install the guest GPU driver

5.3.4 Run your VM

6 Definitions

6.1 VFIO

6.2 Mdev

6.3 IVSHMEM

6.4 KVMFR (Looking Glass)

6.5 NUMA Node

6.6 Application Binary Interface (ABI)

6.7 SR-IOV

6.8 GVT-g

6.9 VirGL

7 Contributing to LibVF.IO

8 Related Projects & Resources

3 Introduction

Today, if you want to run an operating system other than Windows but would like to take your Windows programs along with you, you're not likely to have an entirely seamless experience.

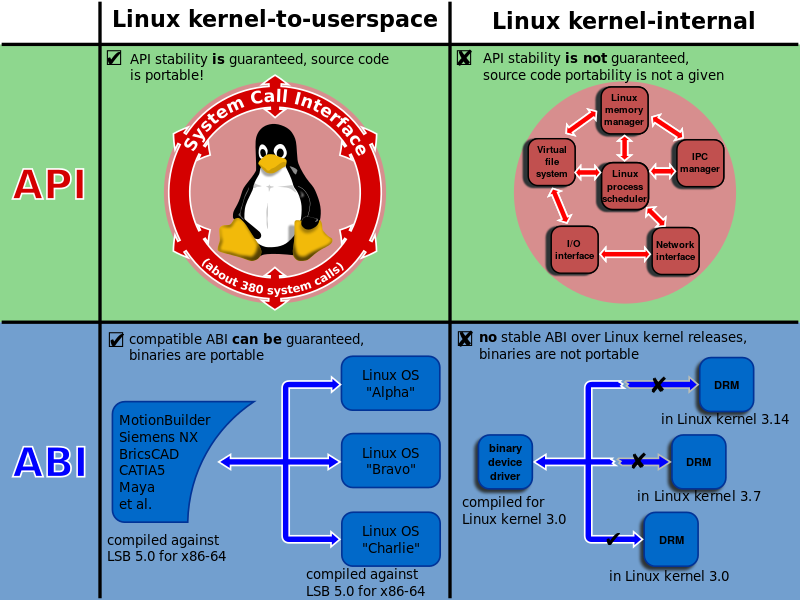

Tools like WINE and Valve's Proton + DXVK have provided a mechanism for Windows applications to run in macOS and Linux environments. As its name suggests, WINE (Wine Is Not an Emulator) is not an emulator; rather, it provides an environment that approximates the Windows ABI (Application Binary Interface) to support Win32/Win64 applications in unsupported environments.

This approach has long been adopted by Linux desktop users and has gained further traction since Valve added official support for it to its Steam game distribution platform. However, despite decades of work by the WINE community, Microsoft still manages to introduce breaking changes to its libraries and APIs. Newly released games often depend on these changes, causing either degraded application performance under WINE or entirely broken compatibility.

LibVF.IO addresses these problems by running real Windows in a virtual machine with native GPU performance. We do this by running an unmodified guest GPU driver with native hardware interfaces. Because Windows programs run on real Windows rather than under a compatibility layer such as WINE or Proton, changes to Windows will not break or otherwise degrade their compatibility.

LibVF.IO is part of an ongoing effort to remedy architectural problems in today's operating systems, as detailed in this post. We aim to create a simplified mechanism, with perfect forward compatibility, for users to interact with binaries whose ABI (Application Binary Interface) is foreign to the host environment while retaining full performance. We will post more on this subject in the future.

4 What can I do with LibVF.IO?

This section will cover what you can do today using LibVF.IO.

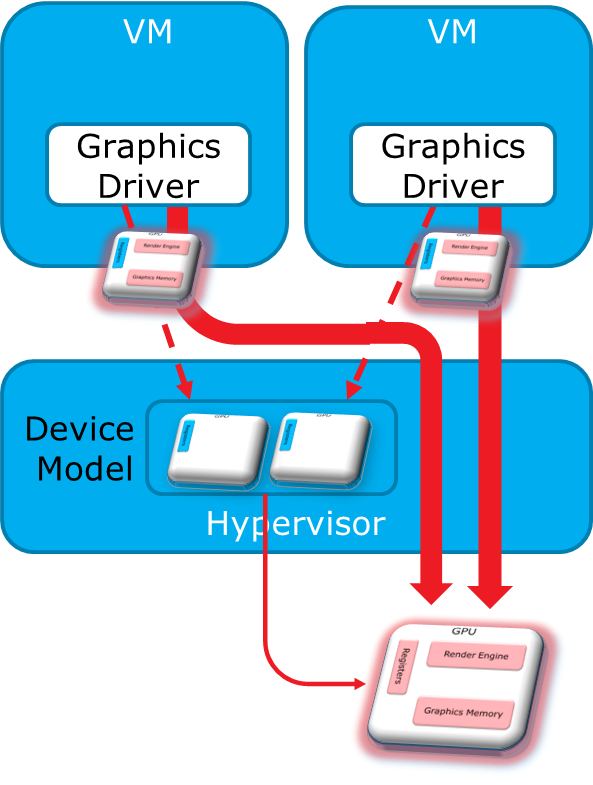

What are some of the current problems with VFIO on commodity GPU hardware?

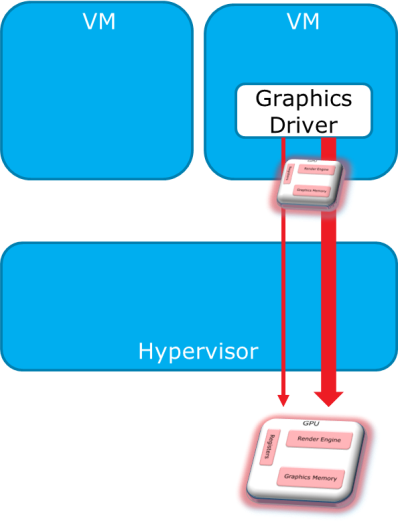

Today, most VFIO setups on commodity GPU hardware involve full passthrough of a discrete physical GPU to a single virtual machine. Enthusiasts have found this a useful way to run GPU-accelerated virtual machines at home, with one obvious asterisk: the host system and the guest virtual machine each require their own discrete physical GPU. Users must therefore own two discrete physical GPUs installed in the same system, which does not reflect the hardware many computer users own today.

What problems does LibVF.IO solve?

LibVF.IO automates the creation and management of mediated devices (partitioned commodity GPUs shared by host & guest), the identification of NUMA nodes, the parsing and management of IOMMU devices, and the allocation of virtual functions to virtual machines.
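As a rough illustration of what parsing IOMMU devices involves, the following Python sketch (not LibVF.IO's own code) walks the standard Linux sysfs layout /sys/kernel/iommu_groups/<group>/devices/<pci-address>. The function name list_iommu_groups and its sysfs_root parameter are hypothetical; the root is configurable only so the logic can be tried against a fake directory tree.

```python
from pathlib import Path


def list_iommu_groups(sysfs_root: str = "/sys") -> dict[int, list[str]]:
    """Map each IOMMU group number to the PCI addresses it contains.

    Reads the standard Linux layout /sys/kernel/iommu_groups/<N>/devices/<addr>.
    Returns an empty dict when the directory is missing (IOMMU disabled).
    """
    groups_dir = Path(sysfs_root) / "kernel" / "iommu_groups"
    if not groups_dir.is_dir():
        return {}
    groups: dict[int, list[str]] = {}
    for group in groups_dir.iterdir():
        if not group.name.isdigit():
            continue  # skip anything that is not a numbered group
        devices_dir = group / "devices"
        if devices_dir.is_dir():
            groups[int(group.name)] = sorted(d.name for d in devices_dir.iterdir())
        else:
            groups[int(group.name)] = []
    return dict(sorted(groups.items()))


if __name__ == "__main__":
    for number, addresses in list_iommu_groups().items():
        print(f"IOMMU group {number}: {', '.join(addresses)}")
```

Devices that share an IOMMU group generally have to be assigned together, which is why this grouping matters when handing hardware to a guest.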

5 Quick Start

The following section provides an overview of getting started with LibVF.IO to create high-performance, GPU-accelerated virtual machines using a single graphics card.

5.1 What you'll need

Here we'll cover what you'll need to get up and running.

5.1.1 Host OS ISO (Ubuntu)

You'll need an installer ISO for the host operating system. Right now we support several host operating systems, such as Debian, Ubuntu 21.04, Arch, and PopOS. This guide covers installing LibVF.IO on Ubuntu 21.04 Desktop. You can download the latest Ubuntu 21.04 Desktop ISO image here.

Once you've downloaded the ISO file, you should create an installer USB drive. If you haven't installed an OS from a USB drive and an ISO file before, tools like Rufus on Windows or balenaEtcher on macOS work great for writing the ISO boot image to the USB drive.

If you haven't installed a Linux operating system on your computer before, here's a step-by-step guide to installing Ubuntu Desktop with screenshots.

5.1.2 LibVF.IO

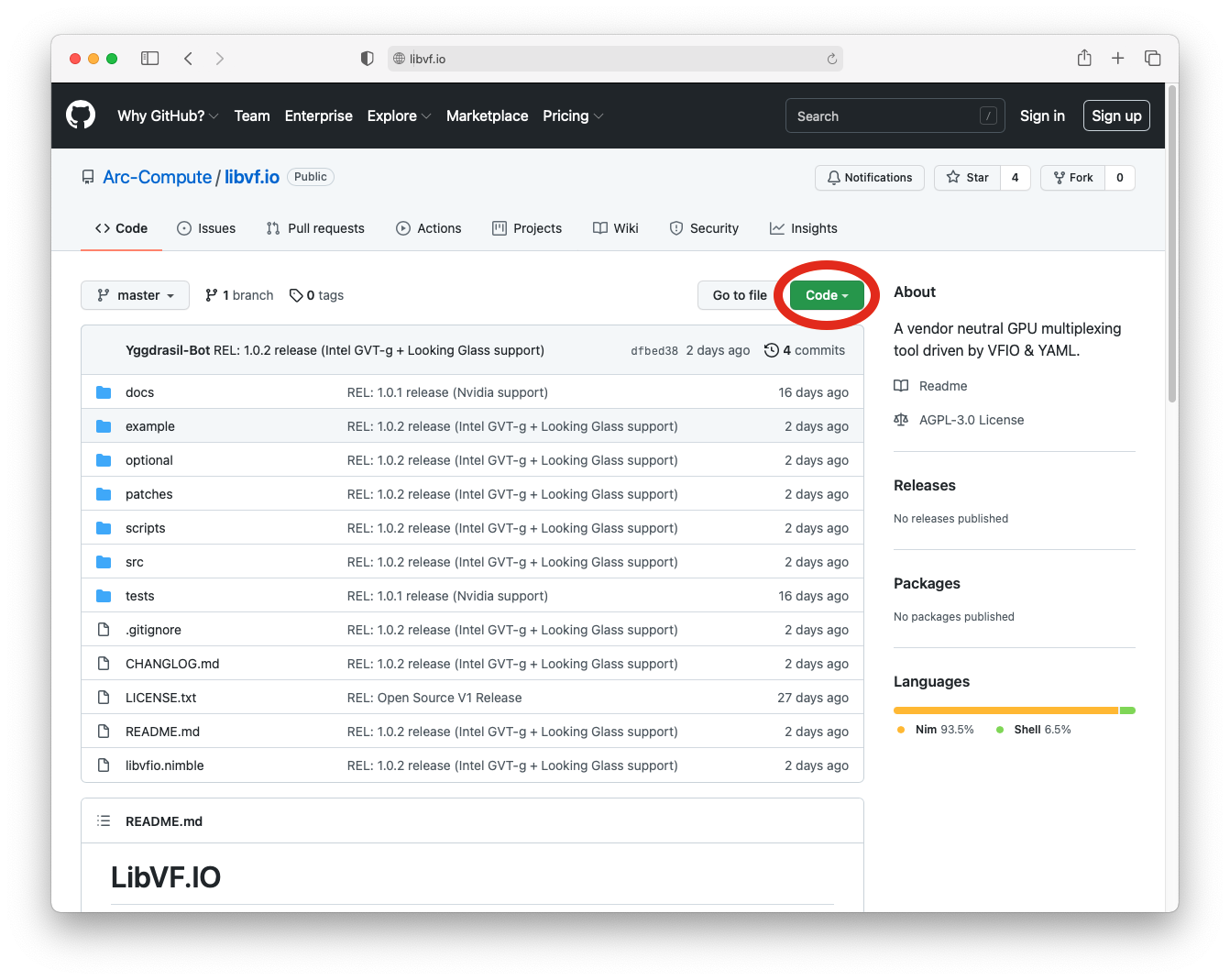

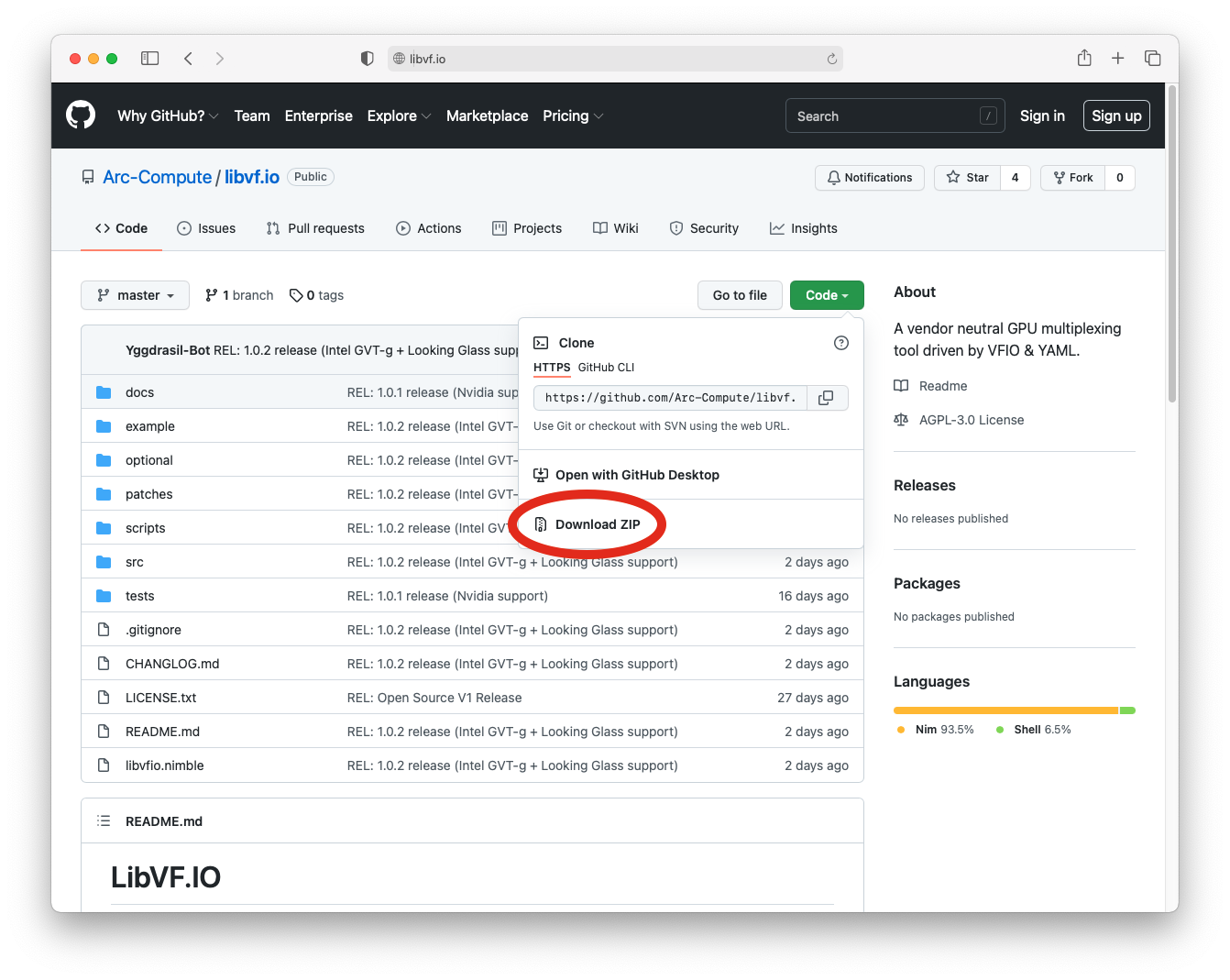

Once you've installed your host operating system (Ubuntu 21.04 Desktop), go to https://libvf.io and clone our repo.

It's okay if you haven't used git before. You can download the files as a zip.

Once you've downloaded the zip file you'll want to extract it to a working directory on your Ubuntu 21.04 Desktop system.

5.1.3 Windows 10 ISO

You'll also need an installer ISO for a Windows 10 virtual machine. We recommend Windows 10 LTSC for the best experience due to its more predictable updates, but this will work with any version of Windows 10. Depending on where you download the .iso file from, you may have several options. If you see x64, AMD64, or x86_64, pick that file; all of these indicate the 64-bit version, which is the one you want. You will need a valid Windows 10 license to install your virtual machine.

5.1.4 Host Mdev GPU Driver

Check the feature matrix on OpenMdev to determine if your hardware is supported then pick the driver matching your graphics vendor.

Nvidia Merged Driver

Note to users of existing OS installs: If you are not running this setup on a fresh operating system install and have installed Nvidia's proprietary driver package please make sure to uninstall your existing Nvidia drivers before attempting to install LibVF.IO.

Note to users of consumer Ampere GPUs: support is in development but is not yet considered stable.

To use the latest drivers, download all three .run files linked under section 1 of this repository, then place them inside the libvf.io /optional/ directory before running the installer script. During installation the merged driver will be automatically generated and installed.

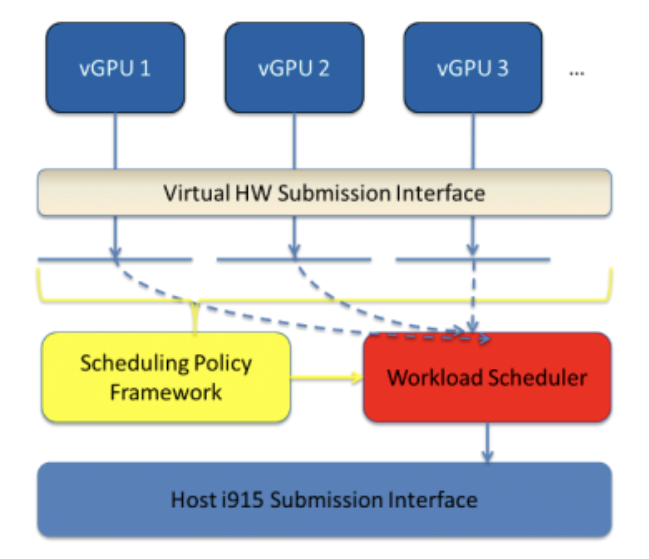

Intel i915 Driver

Instructions for installing Intel's bleeding-edge virtualization drivers for 6th to 9th generation silicon (GVT-g) can be found here.

SR-IOV APIs on 11th & 12th generation Intel Xe graphics have not yet been documented in the i915 driver API, which makes support more difficult. Source code for i915 SR-IOV is available here. If you would like to engage with Intel's GPU driver developers, you can join the Intel-GFX mailing list here.

AMD GPU-IOV Module Driver

AMD's GPU-IOV Module (GIM) can be downloaded and compiled from here. It is possible to run this code on a limited number of commodity GPU devices, which may be modified to enable the relevant APIs. You can read more about the modification process here. While this approach is entirely workable today, there are several downsides to using AMD GPU devices for virtualization:

- The latest AMD GPUs that this software runs on are AMD's Tonga-architecture S7150 and W7100, which reached end of life (EOL) in 2017.

- AMD has since produced other SR-IOV capable GPUs, but neither binaries nor source code have been released to support this hardware.

- AMD provides no support for their existing open source code. To use it you will need to check out pull request 24, which makes the GPU-IOV Module usable on modern kernel versions. You can see the relevant pull request link here.

It is for these reasons that we do not recommend the use of AMD GPU devices for virtualization purposes at this time.

It may be possible to improve support for AMD GPU devices by integrating the amdgpu driver with GPU Virtual Machine (GVM). A first step could be taken by members of the OpenMdev community, independently or in collaboration with amdgpu driver developers: improving the documentation of the amdgpu driver's APIs on this AMD-specific OpenMdev page so that it covers functions relevant to graphics mediation as comprehensively as the equivalent Nvidia-specific OpenMdev page covers Nvidia's Open Kernel Modules (OpenRM driver).

5.2 Run the install script & reboot

Before running the install script you'll want to ensure your motherboard is configured correctly. Most motherboards ship with appropriate defaults but take a moment to double check.

Reboot your computer into your UEFI setup and turn on the settings shown on the OpenMdev CPU support page. It's okay if not all of the settings shown appear in your UEFI; that often means they're already set correctly by default.
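The OpenMdev page lists the exact settings. We assume here that the ones that matter most are CPU virtualization extensions (Intel VT-x / AMD-V) and the IOMMU (Intel VT-d / AMD-Vi). The following hypothetical Python helpers check for them from inside Linux after you reboot: one looks for the vmx or svm flag in /proc/cpuinfo, the other for populated IOMMU groups under /sys/kernel/iommu_groups. An empty IOMMU group directory can also mean an IOMMU kernel boot parameter is missing, not only a UEFI setting.

```python
from pathlib import Path
from typing import Optional


def cpu_virt_extension(cpuinfo_text: str) -> Optional[str]:
    """Return "vmx" (Intel VT-x) or "svm" (AMD-V) if the CPU flags list one."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, value = line.partition(":")
            flags = set(value.split())
            for extension in ("vmx", "svm"):
                if extension in flags:
                    return extension
    return None


def iommu_enabled(sysfs_root: str = "/sys") -> bool:
    """True if the kernel has created at least one IOMMU group."""
    groups = Path(sysfs_root) / "kernel" / "iommu_groups"
    return groups.is_dir() and any(groups.iterdir())
```

On the host you would call cpu_virt_extension(Path("/proc/cpuinfo").read_text()) and iommu_enabled(); a None or False result suggests revisiting the UEFI settings.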

Now that you've installed your host OS, configured your UEFI, and downloaded the appropriate GPU driver files, you can run the install script.

First open a terminal on your freshly installed Ubuntu 21.04 Desktop host system and generate an SSH key. Run the following command and follow the on-screen prompts to generate your key:

$ ssh-keygen

Now navigate to the libvf.io directory you downloaded earlier.

Run the following command from within the libvf.io directory (don't change directory into the scripts directory):

$ ./scripts/install-libvfio.sh

If you are using a system with an Nvidia-based GPU and have placed the optional driver files in the libvf.io /optional/ directory, the installation script will prompt you to reboot your system after it has disabled Ubuntu's default Nouveau GPU driver. After you restart, you'll notice that the screen resolution is reduced. Don't worry, that's part of the installation process.

Now that you've rebooted your machine go back to the libvf.io directory and run the same command again:

$ ./scripts/install-libvfio.sh

This will continue the installation process from where you left off.

If you are using an Nvidia GPU, you should have placed the driver .run files inside the libvf.io /optional/ directory. Provided you have done that, the install script will now automatically install the merged driver and sign the kernel module for you.

You will be asked if you would like to auto-merge drivers.

Follow these steps as they are presented to you:

- When asked if you would like to auto-merge drivers (y/n) press "y" on your keyboard and press enter. This will install your driver.

- During the driver installation you may see your display resolution change and be presented with your computer's lock screen. If this occurs you should log back in to continue the installation.

- When you are asked if you would like to install GPU Virtual Machine (GVM) components (y/n) press "y" on your keyboard and press enter.

You can now reboot your computer.

Now that you've rebooted, you're ready to set up your Windows VM!

5.3 Setting up your first VM

The following section covers the process of starting your first GPU-accelerated VM with LibVF.IO.

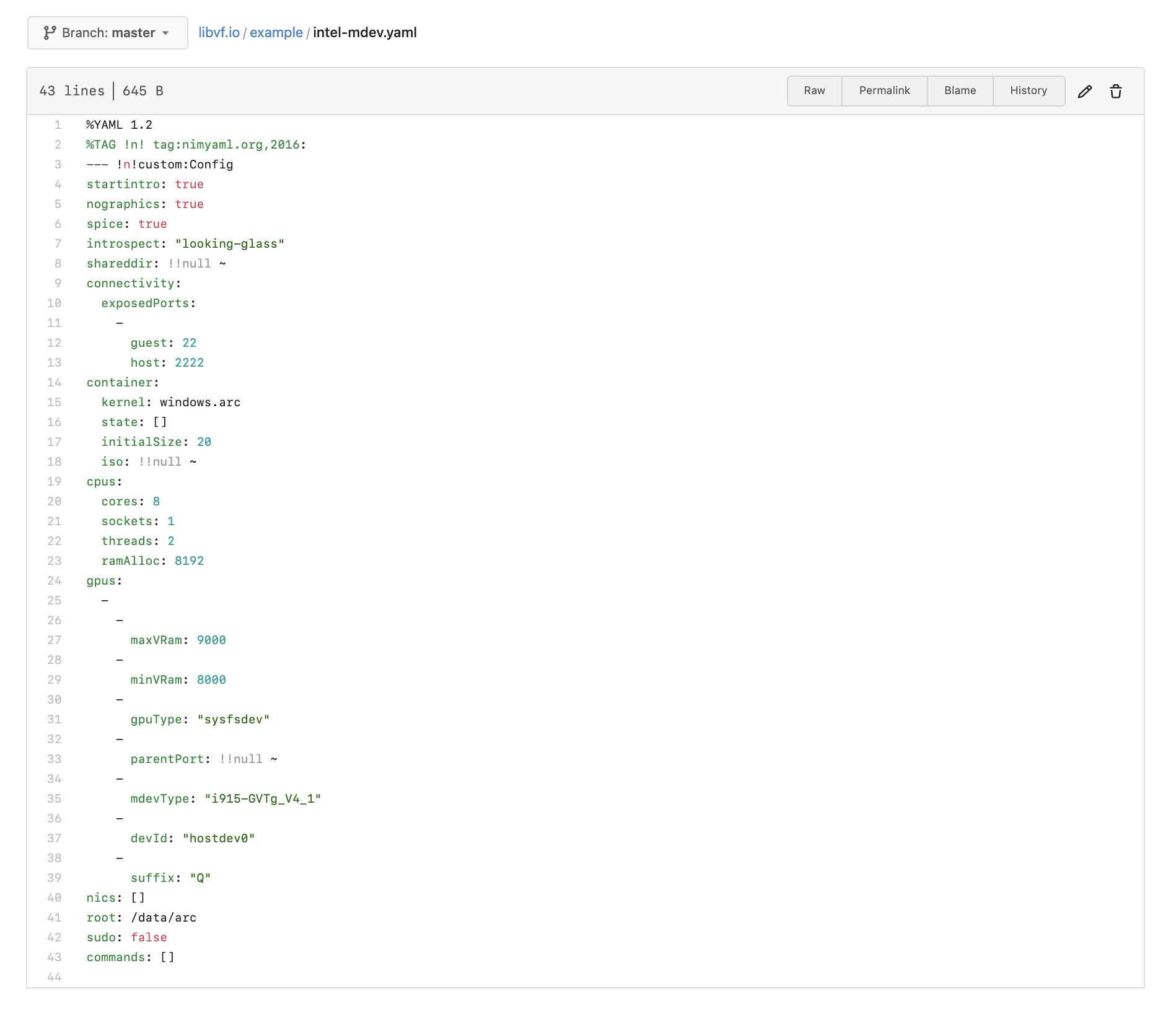

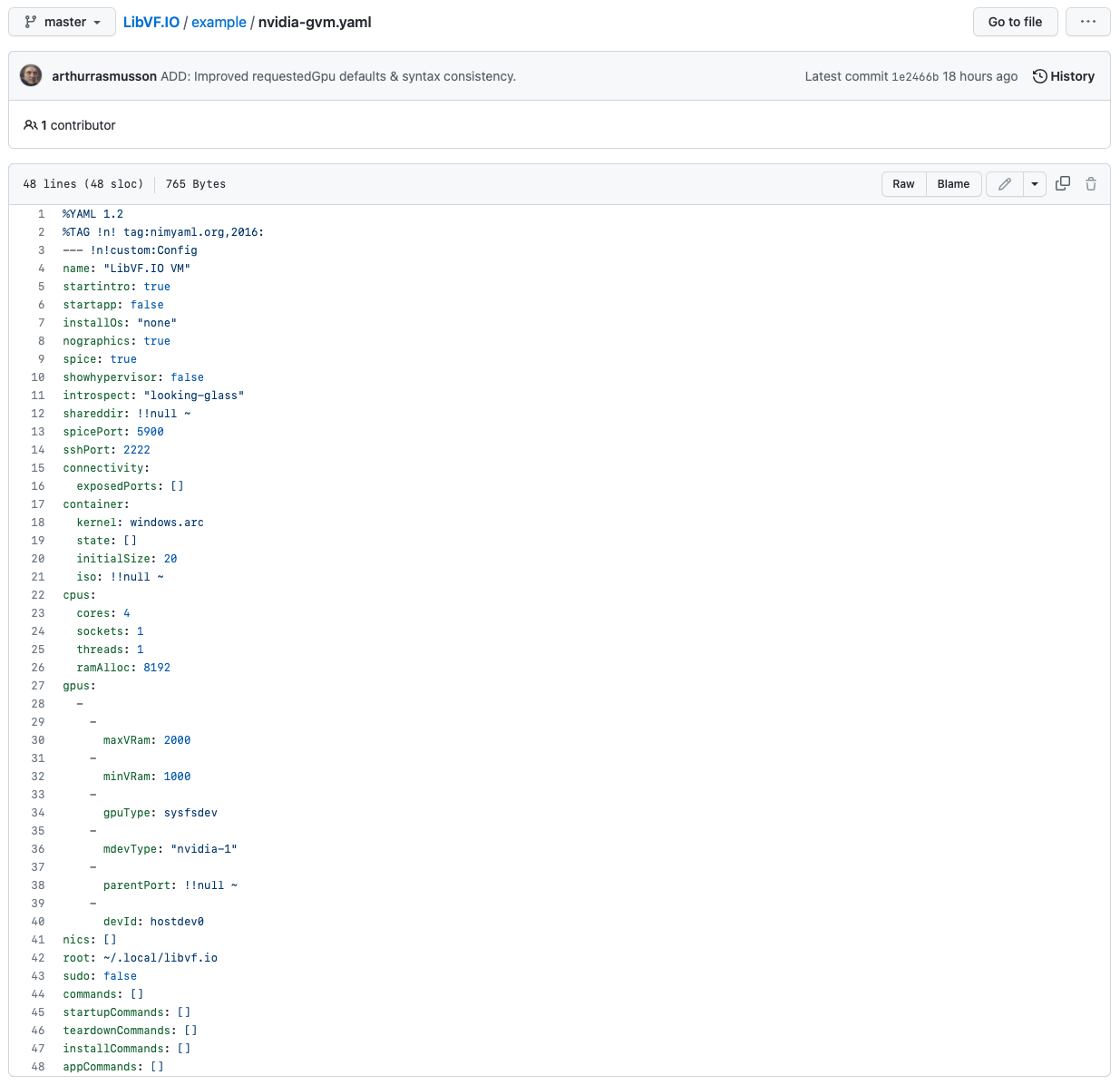

You can begin by copying a template .yaml file from the example folder inside the LibVF.IO repository. If you're using GPU Virtual Machine (GVM), select a .yaml file whose name ends with -gvm.yaml. You can see some of the example YAML files below:

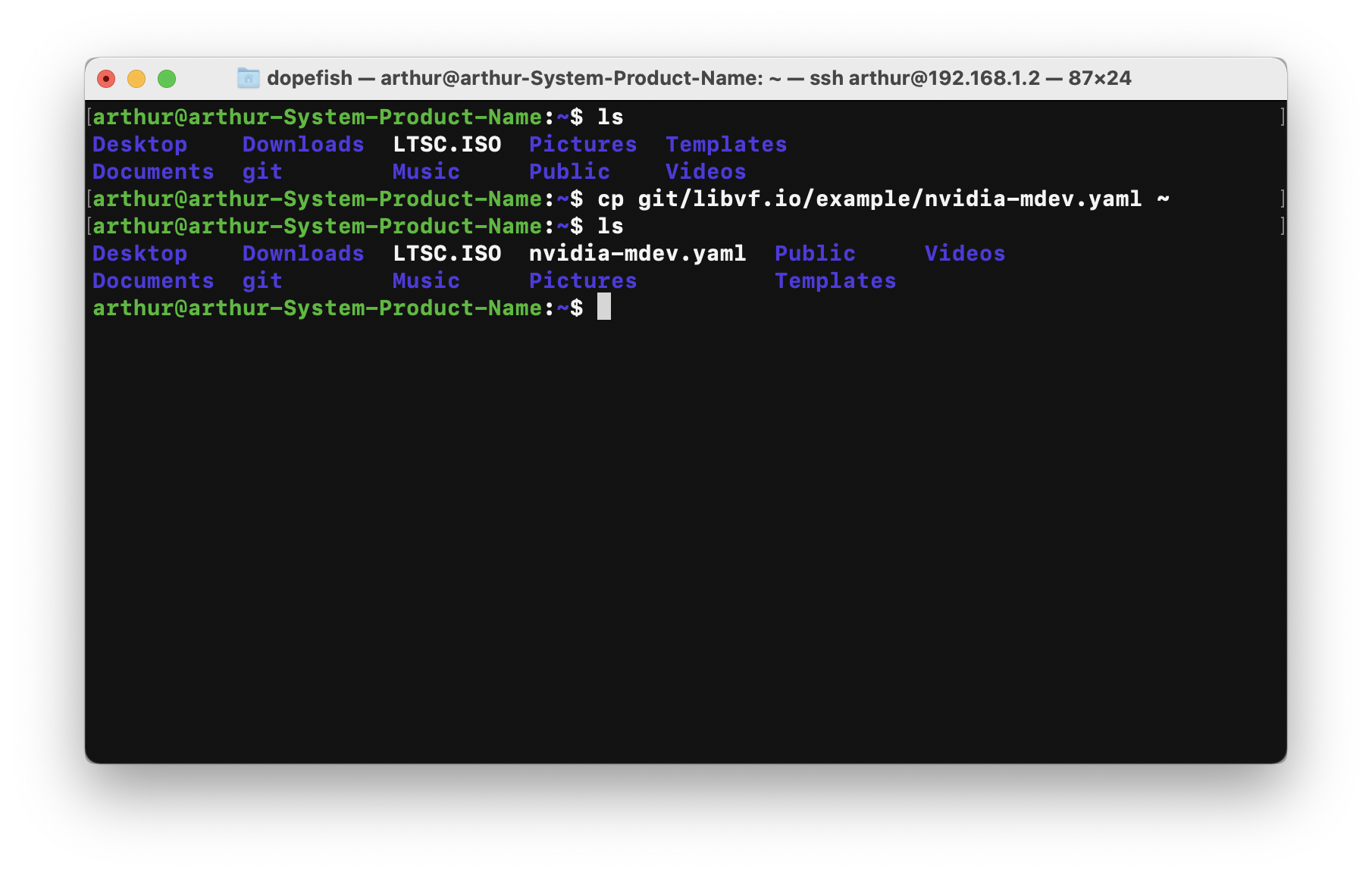

Once you've selected an appropriate template YAML file, copy it anywhere that's convenient for you to work with. In this example we copied it to our home folder, where we also keep the Windows installer .ISO file.

If you are not a GVM user, change the maxVRam: and minVRam: values inside the -mdev.yaml file to reflect your desired virtual GPU (vGPU) guest size. These properties select an available vGPU mediated device type whose VRAM size falls within the range you've chosen, provided a mediated device type of that size exists.

Note to non-GVM users: The default values of minVRam: 1000 & maxVRam: 2000 should ideally be left unchanged during setup, as larger sizes can sometimes interfere with installation. Once you've finished following this guide and your VM setup is running stably, you can incrementally increase these values to find a minVRam & maxVRam size that works well for your workloads and hardware.

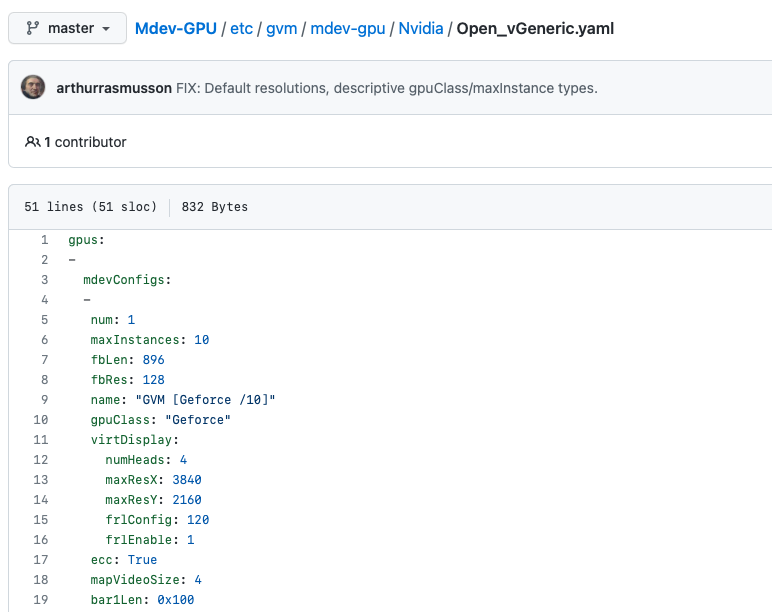
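The range-based selection described above can be sketched as follows. This is a hypothetical model, not LibVF.IO's implementation: it assumes minVRam and maxVRam are in megabytes, that each mediated type's VRAM size and free instance count are already known (on a real system they come from the driver's sysfs entries), and that the largest qualifying type wins when several fit. The type names are made up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MdevType:
    """One mediated device type offered by the host GPU driver (illustrative model)."""
    name: str                 # type identifier, e.g. its sysfs directory name
    vram_mb: int              # VRAM each instance receives, in MB (assumed unit)
    available_instances: int  # how many more instances can still be created


def pick_mdev_type(types: list[MdevType], min_vram: int, max_vram: int) -> Optional[MdevType]:
    """Return the largest type with min_vram <= VRAM <= max_vram and a free instance.

    Returns None when no mediated type of a suitable size exists.
    """
    candidates = [
        t for t in types
        if min_vram <= t.vram_mb <= max_vram and t.available_instances > 0
    ]
    return max(candidates, key=lambda t: t.vram_mb, default=None)


if __name__ == "__main__":
    offered = [
        MdevType("type-1g", 1024, 8),
        MdevType("type-2g", 2048, 4),
        MdevType("type-4g", 4096, 2),
    ]
    choice = pick_mdev_type(offered, min_vram=1000, max_vram=2000)
    print(choice.name if choice else "no suitable type")  # prints "type-1g"
```

Under the megabyte assumption, a 2048 MB type would fall just outside the default 1000 to 2000 range, so the exact sizes your driver offers determine which range values make sense.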

If you are a GPU Virtual Machine (GVM) user, you can make arbitrary changes to your available mediated device topologies. These configuration options can be adjusted in the file /etc/gvm/mdev-gpu/generate-vgpu-types.yaml. To learn more about making arbitrary changes to virtual GPU (vGPU) topologies, read the Mdev-GPU API page on OpenMdev.

Note to GVM users: The fbLen (frame buffer length/VRAM) & fbRes (frame buffer reserve/hypervisor reserved memory) values control your virtual GPU (vGPU) VRAM size directly: GVM creates mediated GPU types (vGPUs) that exactly match your configuration, rather than selecting a vendor driver controlled mediated type within the size range specified by minVRam & maxVRam. fbLen & fbRes should ideally be left unchanged during setup, as larger sizes can sometimes interfere with installation. Once you've finished following this guide and your VM setup is running stably, you can incrementally increase these values to find an fbLen & fbRes size that works well for your workloads and hardware. In our testing, fbRes appears to scale best at about one seventh (1/7) the size of fbLen.
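The 1/7 rule of thumb above is simple arithmetic. This small Python helper (hypothetical, not part of GVM) computes a starting fbRes for a given fbLen. It assumes both values use the same unit, whatever the GVM configuration file defines; treat the result as a starting point for your own testing, not a fixed rule.

```python
def suggested_fb_res(fb_len: int) -> int:
    """Starting fbRes for a given fbLen using the ~1/7 ratio reported above.

    Both values are assumed to use the same unit as the GVM configuration file.
    """
    return round(fb_len / 7)


if __name__ == "__main__":
    for fb_len in (3584, 7168):
        print(f"fbLen={fb_len} -> fbRes~{suggested_fb_res(fb_len)}")
    # prints "fbLen=3584 -> fbRes~512" and "fbLen=7168 -> fbRes~1024"
```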

5.3.1 Install your Windows guest

Now you'll need to install your base Windows virtual machine. Follow the steps detailed in this section to do so.

To start your VM installation, pick the size of your VM guest's disk. For example, to create a 100 gigabyte VM, use the following command:

$ arcd create /path/to/your/file.yaml /path/to/your/windows.iso 100

Once your VM has shut down, the virtual disk image will be converted. Wait for that to finish, then proceed to step 5.3.2.

5.3.2 Install the guest utils

After you've finished installing your Windows VM, shut it down and restart it with the guest utilities installer attached.

You can start your VM with the guest utilities installer attached using the following command:

$ arcd start /path/to/your/yaml/file.yaml --preinstall

Once you've logged into Windows open up the attached virtual CD-ROM.

From within the CD-ROM, right-click on start-install.bat, then click "Run as Administrator". During this process drivers will be loaded, and you'll need to click "allow" several times on dialog boxes to allow Windows to use them. Make sure not to close any of the windows during this process unless they prompt you to do so.

When the automatic guest installation is complete, all of the windows will have either closed automatically or prompted you to close them.

5.3.3 Install the guest GPU driver

Before starting your VM with Looking Glass, you should ensure that Windows has automatically detected your GPU and installed the appropriate driver version. On Windows 10 LTSC with an Nvidia GPU, if you leave the VM alone for a few minutes after installing it, you'll usually come back to find that Windows Update has installed your GPU drivers automatically. If it hasn't, find a consumer GPU driver version online that matches or is older than your host GPU driver version. Nvidia users of GVM have successfully tested driver version 512 in Windows guests. In some cases, such as on AMD GPU devices, a newer driver version on the guest will not be impacted by an older version on the host; with other driver vendors this may not be the case.
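The "matches or is older than" rule can be written as a simple version comparison. This hypothetical Python sketch compares dotted version strings numerically, field by field. Vendors do not always number Linux and Windows builds of the same driver release identically, so treat it as a first-pass check rather than a guarantee of compatibility; the version strings below are illustrative.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted driver version such as "510.47.03" into (510, 47, 3)."""
    return tuple(int(part) for part in version.strip().split("."))


def guest_driver_ok(guest: str, host: str) -> bool:
    """True if the guest driver version matches or is older than the host's."""
    # Tuples compare element by element, so (472, 12) < (510, 47, 3).
    return parse_version(guest) <= parse_version(host)


if __name__ == "__main__":
    print(guest_driver_ok("472.12", "510.47.03"))  # True: guest is older
    print(guest_driver_ok("512.15", "510.47.03"))  # False: guest is newer
```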

5.3.4 Run your VM

Once you've started your VM, make sure you set your Windows audio device to "Speakers (Scream (WDM))".

Now that you've updated your .yaml file, you can run your VM with full graphics performance using the following command:

$ arcd start /path/to/your/yaml/file.yaml

If at any time you need to debug your setup, you can use the --safe-mode and/or --disable-gpu flags to start your VM in legacy graphics mode.

6 Definitions

This section defines terms that are related to or used throughout this article, for readers who may need more context.

6.1 VFIO

Kernel.org defines VFIO or Virtual Function Input Output as "an IOMMU/device agnostic framework for exposing direct device access to userspace, in a secure, IOMMU protected environment." [source]
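With full passthrough, the GPU is typically bound to the kernel's vfio-pci driver instead of its normal graphics driver, and VFIO exposes it to QEMU through /dev/vfio. (Mediated devices, which LibVF.IO uses, leave the physical GPU bound to its vendor driver.) A minimal Python sketch for checking which driver currently owns a PCI device reads the standard driver symlink in sysfs; the function name bound_driver is hypothetical and any PCI address you pass is a placeholder.

```python
import os
from pathlib import Path
from typing import Optional


def bound_driver(pci_addr: str, sysfs_root: str = "/sys") -> Optional[str]:
    """Name of the driver bound to a PCI device (e.g. "vfio-pci"), or None if unbound.

    Linux exposes the binding as a symlink: /sys/bus/pci/devices/<addr>/driver.
    """
    link = Path(sysfs_root) / "bus" / "pci" / "devices" / pci_addr / "driver"
    if not link.is_symlink():
        return None
    # The symlink target ends in .../drivers/<driver-name>.
    return Path(os.readlink(link)).name
```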

6.2 Mdev

Kernel.org defines Virtual Function I/O (VFIO) Mediated devices as: "an IOMMU/device-agnostic framework for exposing direct device access to user space in a secure, IOMMU-protected environment... mediated core driver provides a common interface for mediated device management that can be used by drivers of different devices." [source]

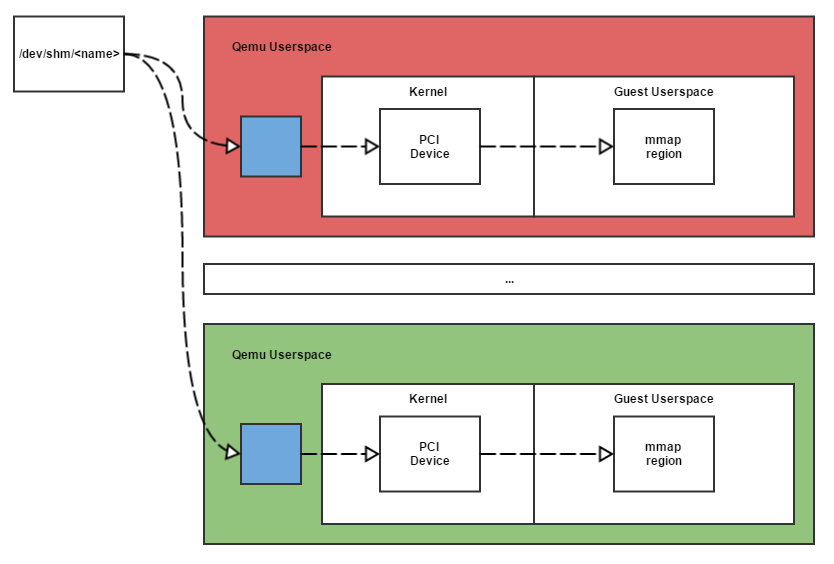
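The mediated core exposes a sysfs interface for this: each parent device lists its supported types under mdev_supported_types/, and writing a UUID to a type's create file instantiates a mediated device (writing 1 to /sys/bus/mdev/devices/<uuid>/remove deletes it). The Python sketch below uses that kernel interface directly. It is our own illustration, not how LibVF.IO's arcd tool is written; the function names are hypothetical, and on a real host it must run as root.

```python
import uuid
from pathlib import Path


def _types_dir(pci_addr: str, sysfs_root: str) -> Path:
    """Directory listing the mediated types a parent PCI device supports."""
    return Path(sysfs_root) / "bus" / "pci" / "devices" / pci_addr / "mdev_supported_types"


def list_mdev_types(pci_addr: str, sysfs_root: str = "/sys") -> dict[str, int]:
    """Map each mediated type offered by a parent device to its available_instances."""
    return {
        t.name: int((t / "available_instances").read_text())
        for t in sorted(_types_dir(pci_addr, sysfs_root).iterdir())
    }


def create_mdev(pci_addr: str, mdev_type: str, sysfs_root: str = "/sys") -> str:
    """Create one mediated device of `mdev_type` and return its UUID.

    Writing a UUID to .../mdev_supported_types/<type>/create asks the parent
    driver to instantiate the device.
    """
    device_uuid = str(uuid.uuid4())
    (_types_dir(pci_addr, sysfs_root) / mdev_type / "create").write_text(device_uuid)
    return device_uuid
```

The new device then appears under /sys/bus/mdev/devices/<uuid>, and QEMU can be given it with -device vfio-pci,sysfsdev=/sys/bus/mdev/devices/<uuid>.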

6.3 IVSHMEM

According to QEMU's GitHub repo: "The Inter-VM shared memory device (ivshmem) is designed to share a memory region between multiple QEMU processes running different guests and the host. In order for all guests to be able to pick up the shared memory area, it is modeled by QEMU as a PCI device exposing said memory to the guest as a PCI BAR." [source]

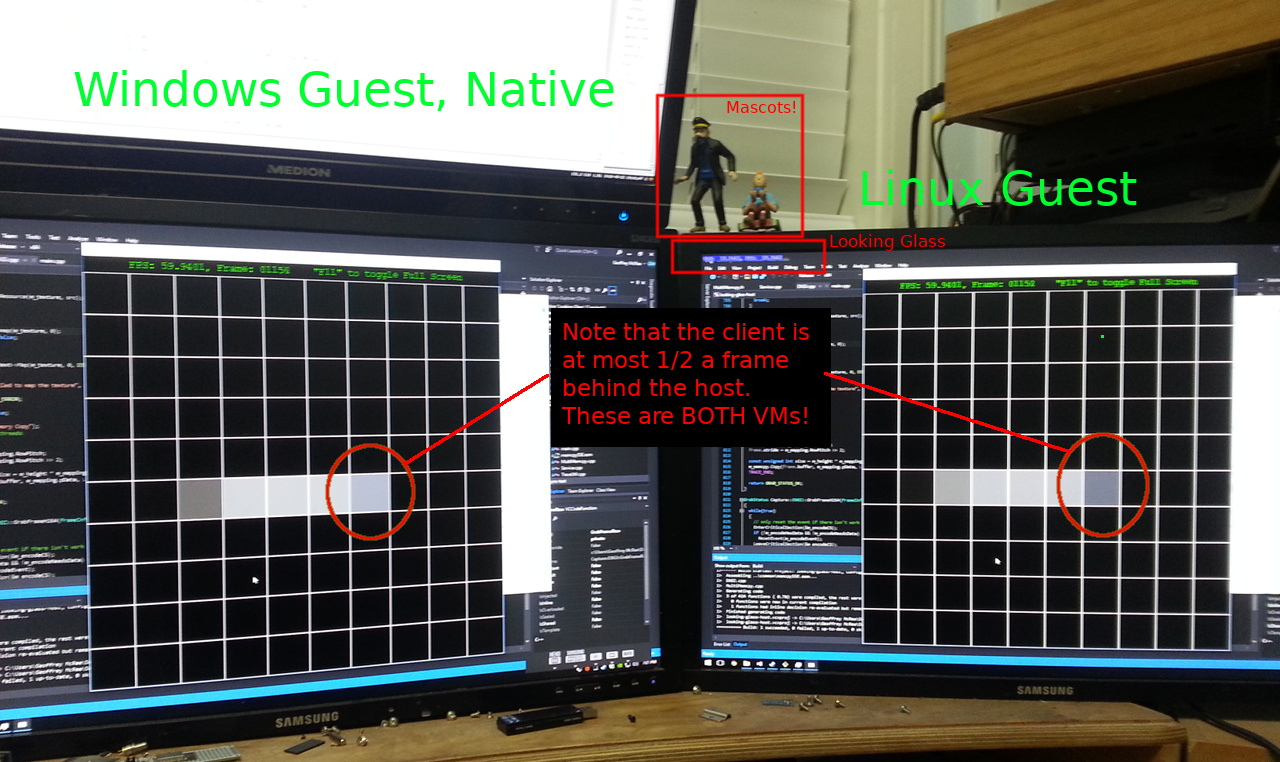
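On the host side, an IVSHMEM region is typically backed by an ordinary shared-memory file (Looking Glass setups commonly use /dev/shm/looking-glass) that QEMU maps into the guest as a PCI BAR. The Python sketch below is illustrative and hypothetical, and shows only the underlying idea: two MAP_SHARED mappings of the same file see each other's writes immediately, which is how the host can read frames that the guest writes into the shared region.

```python
import mmap
import os


def open_shared_region(path: str, size: int) -> mmap.mmap:
    """Map a file-backed shared memory region of `size` bytes (created if missing)."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        if os.fstat(fd).st_size < size:
            os.ftruncate(fd, size)  # grow the backing file to the region size
        return mmap.mmap(fd, size, flags=mmap.MAP_SHARED,
                         prot=mmap.PROT_READ | mmap.PROT_WRITE)
    finally:
        os.close(fd)  # the mapping stays valid after the descriptor is closed
```

For example, mapping the same path twice and writing b"frame" through one mapping makes those bytes readable through the other without any copy.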

6.4 KVMFR (Looking Glass)

looking-glass.io defines Looking Glass as: "an open source application that allows the use of a KVM (Kernel-based Virtual Machine) configured for VGA PCI Pass-through without an attached physical monitor, keyboard or mouse." [source]

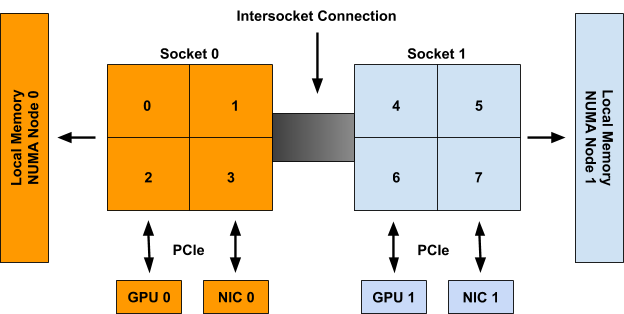

6.5 NUMA Node

Infogalactic defines NUMA as: "Non-uniform memory access (NUMA) is a computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor or memory shared between processors)." [source]
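For a VM, NUMA matters because vCPUs pinned to cores on the GPU's own node reduce cross-node memory traffic. Linux reports a PCI device's node in /sys/bus/pci/devices/<addr>/numa_node (-1 when no affinity is reported) and each node's cores in /sys/devices/system/node/node<N>/cpulist. This Python sketch (hypothetical helper names, not LibVF.IO's code) combines the two to list the CPUs local to a GPU.

```python
from pathlib import Path


def parse_cpulist(text: str) -> list[int]:
    """Expand a kernel cpulist such as "0-3,8,10-11" into [0, 1, 2, 3, 8, 10, 11]."""
    cpus: list[int] = []
    for chunk in text.strip().split(","):
        if not chunk:
            continue
        if "-" in chunk:
            start, end = chunk.split("-")
            cpus.extend(range(int(start), int(end) + 1))
        else:
            cpus.append(int(chunk))
    return cpus


def gpu_local_cpus(pci_addr: str, sysfs_root: str = "/sys") -> list[int]:
    """CPUs on the same NUMA node as the PCI device, or [] if no node is reported."""
    root = Path(sysfs_root)
    node = int((root / "bus" / "pci" / "devices" / pci_addr / "numa_node").read_text())
    if node < 0:  # -1 means the platform reports no NUMA affinity
        return []
    cpulist = (root / "devices" / "system" / "node" / f"node{node}" / "cpulist").read_text()
    return parse_cpulist(cpulist)
```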

6.6 Application Binary Interface (ABI)

Infogalactic defines an ABI as "the interface between two program modules, one of which is often a library or operating system, at the level of machine code." [source]

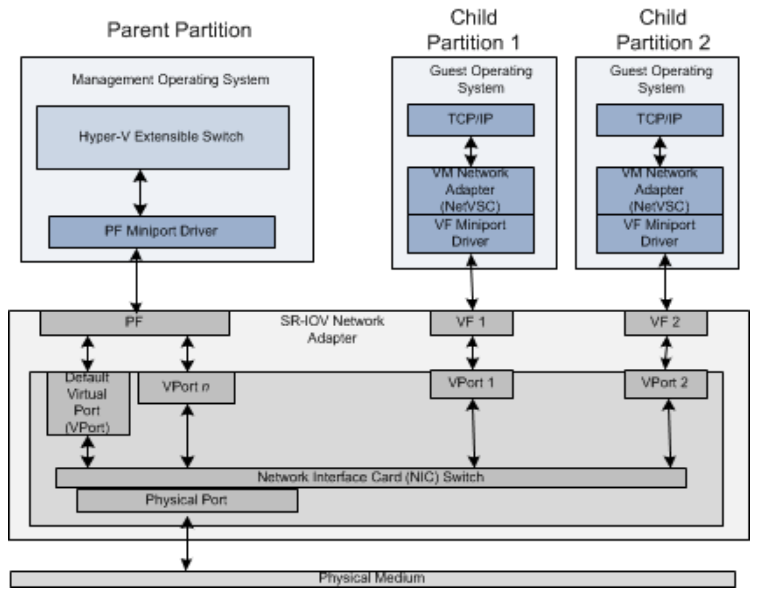

6.7 SR-IOV

docs.microsoft.com defines SR-IOV as "The single root I/O virtualization (SR-IOV) interface is an extension to the PCI Express (PCIe) specification. SR-IOV allows a device, such as a network adapter, to separate access to its resources among various PCIe hardware functions." [source]
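On Linux, SR-IOV virtual functions are usually enabled through two sysfs files on the physical function: sriov_totalvfs (the maximum the device supports) and sriov_numvfs (how many are currently enabled). The Python sketch below is a hypothetical helper showing that interface. The kernel rejects changing a non-zero VF count directly to a different non-zero value, so the helper writes 0 first. Whether a given GPU exposes these files at all depends on its hardware and driver.

```python
from pathlib import Path


def enable_vfs(pci_addr: str, count: int, sysfs_root: str = "/sys") -> None:
    """Ask the physical function's driver to expose `count` SR-IOV virtual functions."""
    dev = Path(sysfs_root) / "bus" / "pci" / "devices" / pci_addr
    total = int((dev / "sriov_totalvfs").read_text())
    if not 0 <= count <= total:
        raise ValueError(f"{pci_addr} supports at most {total} VFs, asked for {count}")
    current = int((dev / "sriov_numvfs").read_text())
    if current == count:
        return
    if current and count:
        # A non-zero count cannot be changed in place; disable all VFs first.
        (dev / "sriov_numvfs").write_text("0")
    (dev / "sriov_numvfs").write_text(str(count))
```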

6.8 GVT-g

wiki.archlinux.org defines Intel GVT-g as "a technology that provides mediated device passthrough for Intel GPUs (Broadwell and newer). It can be used to virtualize the GPU for multiple guest virtual machines, effectively providing near-native graphics performance in the virtual machine and still letting your host use the virtualized GPU normally." [source]

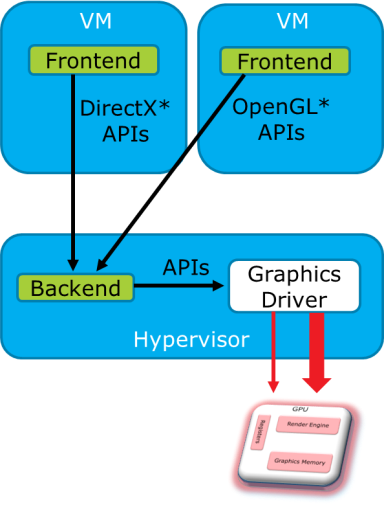

6.9 VirGL

lwn.net defines Virgl as: "a way for guests running in a virtual machine (VM) to access the host GPU using OpenGL and other APIs... The virgl stack consists of an application running in the guest that sends OpenGL to the Mesa virgl driver, which uses the virtio-gpu driver in the guest kernel to communicate with QEMU on the host." [source]

VirGL and VirtIO-GPU provide a robust way to run graphics workloads on hardware platforms that cannot run mediated device drivers, whether because of artificial vendor-imposed firmware limitations on commodity GPU hardware (AMD GPU-IOV Module) or vendor-imposed driver limitations (Nvidia GRID). In those cases VirGL will continue to remain relevant. Unfortunately, this approach incurs a substantial performance penalty compared to VFIO passthrough.

7 Contributing to LibVF.IO

If you'd like to participate in the development of LibVF.IO, you can send us a pull request or submit issues on our GitHub repo. Right now we'd especially appreciate help improving documentation, automating setup on more host operating systems, and automating setup on Intel GVT-g capable hardware.

8 Related Projects & Resources

The following section will provide links to related projects and resources.

Useful Tools

GPU Virtual Machine (GVM)

A GPU Virtual Machine for IOMMU-capable computers such as x86_64 & ARM.

Looking Glass

An extremely low-latency KVMFR (KVM FrameRelay) implementation for guests with VGA PCI Passthrough.

Intel GVT-g

Intel's open Graphics Virtualization Technology.

AMD GIM

AMD's GPU-IOV Module for MxGPU capable devices.

vGPU_Unlock

Unlock vGPU functionality for consumer-grade Nvidia GPUs.

Guides & Wikis

Level1Techs Forum [VFIO Topic]

Ground zero for VFIO enthusiasts.

The best resource available on setup and use of KVM Frame Relay.

Intel GVT-g Setup Guide

Intel's official guide on setting up GVT-g. (caution: kernel recompilation required)

SR-IOV Modding AMD's W7100

A guide detailing steps involved in unlocking vGPU functionality on AMD hardware.

vGPU_Unlock Wiki

A helpful guide on driver setup for Nvidia consumer hardware.

If you have any comments, recommendations, or questions pertaining to any of the above material, my contact information is as follows:

Arthur Rasmusson can be reached at twitter.com/arcvrarthur on Twitter and by email at arthur@arccompute.com.