The Engine of HPC

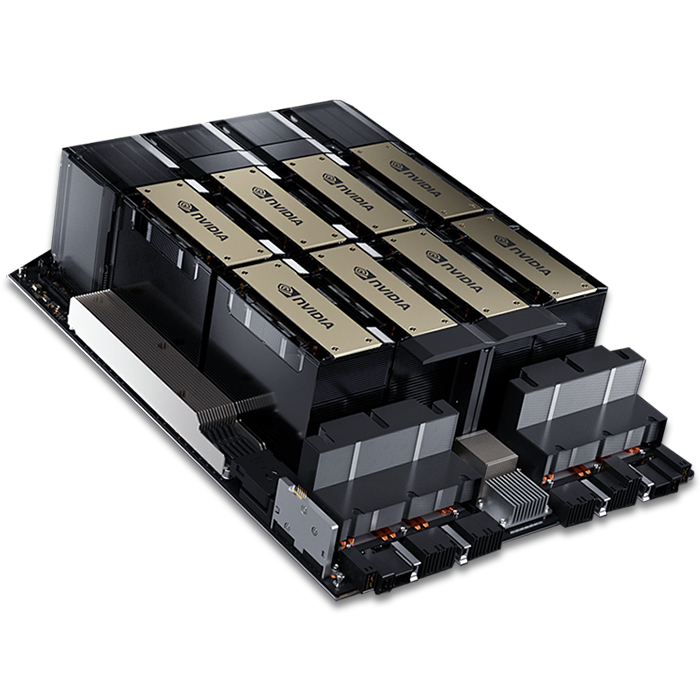

In the ever-evolving landscape of high-performance computing (HPC), GPUs are the primary engines, powering workloads from 3D simulation to artificial intelligence through intricate, massively parallel mathematical operations. Anyone who works closely with GPUs knows that a fundamental challenge in harnessing them effectively is coordinating the complex interplay of threads while managing memory bandwidth.

Low-level Optimization

Arc Compute’s pioneering research highlights the significant benefits of running processes concurrently: while one task waits on memory accesses, the GPU can execute additional arithmetic operations for another, improving overall GPU performance. Innovations in low-level GPU task management break with the conventional practice of isolating application/task execution, yielding better-optimized pipelines and bandwidth use without sacrificing performance.
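To make the overlap argument concrete, here is a minimal sketch of an idealized timing model. The `Kernel` profile, its millisecond figures, and the assumption that co-scheduled kernels share arithmetic units and memory bandwidth with perfect overlap and no contention are all illustrative assumptions, not a description of Arc Compute's scheduler. The concurrent figure is a lower bound, not a prediction.

```python
from dataclasses import dataclass


@dataclass
class Kernel:
    """Hypothetical kernel profile: how long its arithmetic and its memory
    traffic would each take (ms) if it had the whole GPU to itself."""
    name: str
    compute_ms: float
    memory_ms: float


def serial_time(kernels: list[Kernel]) -> float:
    # Isolated execution: kernels run back to back, and within each kernel
    # the slower of its two resources (arithmetic or memory) dominates.
    return sum(max(k.compute_ms, k.memory_ms) for k in kernels)


def concurrent_time_lower_bound(kernels: list[Kernel]) -> float:
    # Idealized co-scheduling: arithmetic units and memory bandwidth are
    # shared, so run time can be no shorter than the busier resource's total.
    total_compute = sum(k.compute_ms for k in kernels)
    total_memory = sum(k.memory_ms for k in kernels)
    return max(total_compute, total_memory)


a = Kernel("matmul", compute_ms=8.0, memory_ms=2.0)  # compute-bound
b = Kernel("stream", compute_ms=1.0, memory_ms=7.0)  # memory-bound
print(serial_time([a, b]), concurrent_time_lower_bound([a, b]))  # prints "15.0 9.0"
```

Here the compute-bound `matmul` can keep the arithmetic units busy while the memory-bound `stream` waits on memory, so the idealized bound drops from 15.0 ms to 9.0 ms. Real kernels also contend for caches, registers, and SM occupancy, so actual gains are typically smaller.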

Guided by Amdahl’s Law and Gustafson’s Law, Arc Compute minimizes compute times through low-level optimization points, mitigating the latency introduced by memory accesses, thread divergence, and “cold” (idle) SM cores. At the core of these GPU performance optimizations is a strategic pairing of compute-bound and memory-bound workloads that fills the pipelines without over-saturating them, which requires meticulous orchestration of task execution and pipeline utilization.
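One common way to decide whether a workload is compute-bound or memory-bound is the roofline model. It compares a task's arithmetic intensity (FLOPs per byte of memory traffic) with the device's machine balance (peak FLOP/s divided by peak memory bandwidth). The sketch below assumes illustrative peak figures and uses a hypothetical greedy pairing of the most compute-heavy task with the most memory-heavy one. It shows the idea of complementary pairing, not Arc Compute's actual matching algorithm.

```python
from dataclasses import dataclass

# Illustrative, assumed device peaks; substitute measured values for a real GPU.
PEAK_FLOPS = 20e12       # floating-point operations per second
PEAK_BANDWIDTH = 2e12    # bytes per second of device-memory bandwidth
MACHINE_BALANCE = PEAK_FLOPS / PEAK_BANDWIDTH  # FLOP/byte at the roofline ridge point


@dataclass
class Task:
    name: str
    flops: float        # floating-point operations the task performs
    bytes_moved: float  # bytes it reads from and writes to device memory

    @property
    def intensity(self) -> float:
        """Arithmetic intensity in FLOP per byte."""
        return self.flops / self.bytes_moved

    @property
    def compute_bound(self) -> bool:
        # At or above the ridge point, peak arithmetic throughput is the limit.
        return self.intensity >= MACHINE_BALANCE


def pair_tasks(tasks: list[Task]) -> list[tuple[str, str]]:
    """Hypothetical greedy pairing: match compute-bound tasks with memory-bound
    ones, most extreme first, so each pair stresses different GPU resources."""
    ordered = sorted(tasks, key=lambda t: t.intensity)
    pairs = []
    lo, hi = 0, len(ordered) - 1
    while lo < hi and ordered[hi].compute_bound and not ordered[lo].compute_bound:
        pairs.append((ordered[hi].name, ordered[lo].name))
        lo += 1
        hi -= 1
    # Tasks in ordered[lo:hi + 1] have no complementary partner and run alone.
    return pairs


tasks = [
    Task("gemm", flops=1e12, bytes_moved=1e10),    # 100 FLOP/byte
    Task("conv", flops=4e11, bytes_moved=1e10),    # 40 FLOP/byte
    Task("axpy", flops=2e9, bytes_moved=1.2e10),   # ~0.17 FLOP/byte
    Task("reduce", flops=1e9, bytes_moved=4e9),    # 0.25 FLOP/byte
]
print(pair_tasks(tasks))  # [('gemm', 'axpy'), ('conv', 'reduce')]
```

With these four hypothetical tasks, `gemm` is paired with `axpy` and `conv` with `reduce`. Any task left without a complementary partner would simply run alone rather than being forced into a pair that over-saturates one resource.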

Continuous Development

As GPU architectures continue to evolve, optimization strategies must evolve with them. Arc Compute is leading this effort, building its optimizations to adapt to future GPU architectures. Join us on this journey to redefine efficiency benchmarks, blending innovation and technical expertise in the HPC space.

Pipeline Optimization: Arc Compute works at the level of low-level GPU task management, matching tasks so that together they saturate the GPU's pipelines, ensuring seamless task processing and efficient data transfer.

Amdahl’s Law: A formula for the maximum overall improvement achievable when only one part of a system is improved. In parallel computing, it predicts the theoretical speedup from running a fixed-size workload on multiple processors: the fraction of the work that must run serially caps the speedup, no matter how many processors are added.

Gustafson’s Law: A principle in parallel computing that addresses the scalability of parallel systems. It assumes the problem size grows with the machine: as the number of processors increases, the overall computational workload can be increased proportionally, so execution time stays roughly constant and the scaled speedup grows nearly linearly with the processor count.
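Both laws reduce to one-line formulas. With a parallel fraction p and N processors, Amdahl’s Law gives S = 1 / ((1 - p) + p/N), and Gustafson’s Law gives the scaled speedup S = (1 - p) + pN. The sketch below evaluates both. The value p = 0.95 is an arbitrary example. Note that the two laws measure p on different baselines: the single-processor run for Amdahl and the N-processor run for Gustafson.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Amdahl's Law (fixed problem size): p is the fraction of the
    single-processor run time that can be parallelized across n processors."""
    return 1.0 / ((1.0 - p) + p / n)


def gustafson_speedup(p: float, n: int) -> float:
    """Gustafson's Law (problem size grows with n): p is the fraction of the
    n-processor run time spent in parallel work."""
    return (1.0 - p) + p * n


# Compare the two laws for the same parallel fraction at increasing scale.
for n in (1, 10, 100, 1000):
    print(n, round(amdahl_speedup(0.95, n), 2), round(gustafson_speedup(0.95, n), 2))
```

With p = 0.95, Amdahl’s speedup stays below 1/(1 - p) = 20 however many processors are added. Gustafson’s scaled speedup keeps growing roughly in proportion to N, because the workload grows along with the machine.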